remove gateway completely (#15929)


@@ -2,8 +2,6 @@

|

||||

|

||||

Events occurring on objects in a bucket can be monitored using bucket event notifications.

|

||||

|

||||

> NOTE: Gateway mode does not support bucket notifications (except NAS gateway).

|

||||

|

||||

Various event types supported by MinIO server are

|

||||

|

||||

| Supported Object Event Types | | |

|

||||

|

||||

@@ -4,8 +4,6 @@

|

||||

|

||||

Buckets can be configured to have `Hard` quota - it disallows writes to the bucket after configured quota limit is reached.

|

||||

|

||||

> NOTE: Bucket quotas are not supported under gateway or standalone single disk deployments.

|

||||

|

||||

## Prerequisites

|

||||

|

||||

- Install MinIO - [MinIO Quickstart Guide](https://min.io/docs/minio/linux/index.html#procedure).

|

||||

|

||||

@@ -116,10 +116,6 @@ Below is a list of common files and content-types which are typically not suitab

|

||||

All files with these extensions and mime types are excluded from compression,

|

||||

even if compression is enabled for all types.

|

||||

|

||||

### 5. Notes

|

||||

|

||||

- MinIO does not support compression for Gateway implementations.

|

||||

|

||||

## To test the setup

|

||||

|

||||

To test this setup, practice put calls to the server using `mc` and use `mc ls` on

|

||||

|

||||

@@ -89,38 +89,6 @@ MINIO_STORAGE_CLASS_RRS (string) set the parity count for reduced redun

|

||||

MINIO_STORAGE_CLASS_COMMENT (sentence) optionally add a comment to this setting

|

||||

```

|

||||

|

||||

### Cache

|

||||

|

||||

MinIO provides caching storage tier for primarily gateway deployments, allowing you to cache content for faster reads, cost savings on repeated downloads from the cloud.

|

||||

|

||||

```

|

||||

KEY:

|

||||

cache add caching storage tier

|

||||

|

||||

ARGS:

|

||||

drives* (csv) comma separated mountpoints e.g. "/optane1,/optane2"

|

||||

expiry (number) cache expiry duration in days e.g. "90"

|

||||

quota (number) limit cache drive usage in percentage e.g. "90"

|

||||

exclude (csv) comma separated wildcard exclusion patterns e.g. "bucket/*.tmp,*.exe"

|

||||

after (number) minimum number of access before caching an object

|

||||

comment (sentence) optionally add a comment to this setting

|

||||

```

|

||||

|

||||

or environment variables

|

||||

|

||||

```

|

||||

KEY:

|

||||

cache add caching storage tier

|

||||

|

||||

ARGS:

|

||||

MINIO_CACHE_DRIVES* (csv) comma separated mountpoints e.g. "/optane1,/optane2"

|

||||

MINIO_CACHE_EXPIRY (number) cache expiry duration in days e.g. "90"

|

||||

MINIO_CACHE_QUOTA (number) limit cache drive usage in percentage e.g. "90"

|

||||

MINIO_CACHE_EXCLUDE (csv) comma separated wildcard exclusion patterns e.g. "bucket/*.tmp,*.exe"

|

||||

MINIO_CACHE_AFTER (number) minimum number of access before caching an object

|

||||

MINIO_CACHE_COMMENT (sentence) optionally add a comment to this setting

|

||||

```

|

||||

|

||||

#### Etcd

|

||||

|

||||

MinIO supports storing encrypted IAM assets in etcd, if KMS is configured. Please refer to how to encrypt your config and IAM credentials [here](https://github.com/minio/minio/blob/master/docs/kms/IAM.md).

|

||||

@@ -283,8 +251,6 @@ Example: the following setting will decrease the scanner speed by a factor of 3,

|

||||

|

||||

Once set the scanner settings are automatically applied without the need for server restarts.

|

||||

|

||||

> NOTE: Data usage scanner is not supported under Gateway deployments.

|

||||

|

||||

### Healing

|

||||

|

||||

Healing is enabled by default. The following configuration settings allow for more staggered delay in terms of healing. The healing system by default adapts to the system speed and pauses up to '1sec' per object when the system has `max_io` number of concurrent requests. It is possible to adjust the `max_sleep` and `max_io` values, thereby increasing the healing speed. The delay between each operation of the healer can be adjusted with `mc admin config set alias/ heal max_sleep=1s`, and the maximum number of concurrent requests allowed before the healer starts slowing down can be configured with `mc admin config set alias/ heal max_io=30`. By default the wait delay is `1sec` beyond 10 concurrent operations. This means the healer will sleep *1 second* at most for each heal operation if there are more than *10* concurrent client requests.

|

||||

@@ -310,8 +276,6 @@ Example: The following settings will increase the heal operation speed by allowi

|

||||

|

||||

Once set the healer settings are automatically applied without the need for server restarts.

|

||||

|

||||

> NOTE: Healing is not supported for Gateway deployments.

|

||||

|

||||

## Environment only settings (not in config)

|

||||

|

||||

### Browser

|

||||

|

||||

@@ -1,117 +0,0 @@

|

||||

# Disk Caching Design [](https://slack.min.io)

|

||||

|

||||

This document explains some basic assumptions and design approach, limits of the disk caching feature. If you're looking to get started with disk cache, we suggest you go through the [getting started document](https://github.com/minio/minio/blob/master/docs/disk-caching/README.md) first.

|

||||

|

||||

## Supported environment variables

|

||||

|

||||

| Environment | Description |

|

||||

| :---------------------- | ------------------------------------------------------------ |

|

||||

| MINIO_CACHE_DRIVES | list of mounted cache drives or directories separated by "," |

|

||||

| MINIO_CACHE_EXCLUDE | list of cache exclusion patterns separated by "," |

|

||||

| MINIO_CACHE_QUOTA | maximum permitted usage of the cache in percentage (0-100) |

|

||||

| MINIO_CACHE_AFTER | minimum number of access before caching an object |

|

||||

| MINIO_CACHE_WATERMARK_LOW | % of cache quota at which cache eviction stops |

|

||||

| MINIO_CACHE_WATERMARK_HIGH | % of cache quota at which cache eviction starts |

|

||||

| MINIO_CACHE_RANGE | set to "on" or "off" caching of independent range requests per object, defaults to "on" |

|

||||

| MINIO_CACHE_COMMIT | set to 'writeback' or 'writethrough' for upload caching |

|

||||

|

||||

## Use-cases

|

||||

|

||||

The edge serves as a gateway cache, creating an intermediary between the application and the public cloud. In this scenario, the gateways are backed by servers with a number of either hard drives or flash drives and are deployed in edge data centers. All access to the public cloud goes through these caches (write-through cache), so data is uploaded to the public cloud with strict consistency guarantee. Subsequent reads are served from the cache based on ETAG match or the cache control headers.

|

||||

|

||||

This architecture reduces costs by decreasing the bandwidth needed to transfer data, improves performance by keeping data cached closer to the application and also reduces the operational cost - the data is still kept in the public cloud, just cached at the edge.

|

||||

|

||||

Following example shows:

|

||||

|

||||

- start MinIO gateway to s3 with edge caching enabled on '/mnt/drive1', '/mnt/drive2' and '/mnt/export1 ... /mnt/export24' drives

|

||||

- exclude all objects under 'mybucket', exclude all objects with '.pdf' as extension.

|

||||

- cache only those objects accessed at least 3 times.

|

||||

- cache garbage collection triggers at the high watermark (i.e. when cache disk usage reaches 90% of the cache quota, or 72% of disk usage) and evicts the oldest objects by access time until the low watermark is reached (70% of the cache quota, i.e. 56% of disk usage).

|

||||

|

||||

```sh

|

||||

export MINIO_CACHE_DRIVES="/mnt/drive1,/mnt/drive2,/mnt/export{1...24}"

|

||||

export MINIO_CACHE_EXCLUDE="mybucket/*,*.pdf"

|

||||

export MINIO_CACHE_QUOTA=80

|

||||

export MINIO_CACHE_AFTER=3

|

||||

export MINIO_CACHE_WATERMARK_LOW=70

|

||||

export MINIO_CACHE_WATERMARK_HIGH=90

|

||||

|

||||

minio gateway s3 https://s3.amazonaws.com

|

||||

```

|

||||

|

||||

### Run MinIO gateway with cache on Docker Container

|

||||

|

||||

### Stable

|

||||

|

||||

Cache drives need to have `strictatime` or `relatime` enabled for disk caching feature. In this example, mount the xfs file system on /mnt/cache with `strictatime` or `relatime` enabled.

|

||||

|

||||

```sh

|

||||

truncate -s 4G /tmp/data

|

||||

```

|

||||

|

||||

### Build xfs filesystem on /tmp/data

|

||||

|

||||

```

|

||||

mkfs.xfs /tmp/data

|

||||

```

|

||||

|

||||

### Create mount dir

|

||||

|

||||

```

|

||||

sudo mkdir /mnt/cache #

|

||||

```

|

||||

|

||||

### Mount xfs on /mnt/cache with atime

|

||||

|

||||

```

|

||||

sudo mount -o relatime /tmp/data /mnt/cache

|

||||

```

|

||||

|

||||

### Start using the cached drive with S3 gateway

|

||||

|

||||

```

|

||||

podman run --net=host -e MINIO_ROOT_USER={s3-access-key} -e MINIO_ROOT_PASSWORD={s3-secret-key} \

|

||||

-e MINIO_CACHE_DRIVES=/cache -e MINIO_CACHE_QUOTA=99 -e MINIO_CACHE_AFTER=0 \

|

||||

-e MINIO_CACHE_WATERMARK_LOW=90 -e MINIO_CACHE_WATERMARK_HIGH=95 \

|

||||

-v /mnt/cache:/cache quay.io/minio/minio gateway s3 --console-address ":9001"

|

||||

```

|

||||

|

||||

## Assumptions

|

||||

|

||||

- Disk cache quota defaults to 80% of your drive capacity.

|

||||

- The cache drives are required to be a filesystem mount point with [`atime`](http://kerolasa.github.io/filetimes.html) support to be enabled on the drive. Alternatively writable directories with atime support can be specified in MINIO_CACHE_DRIVES

|

||||

- A garbage collection sweep happens whenever cache disk usage reaches the high watermark with respect to the configured cache quota. GC evicts the least recently accessed objects until the cache low watermark is reached with respect to the configured cache quota. Garbage collection runs a cache eviction sweep at 30 minute intervals.

|

||||

- An object is only cached when drive has sufficient disk space.

|

||||

|

||||

## Behavior

|

||||

|

||||

Disk caching applies to **downloaded** objects, i.e.:

|

||||

|

||||

- Caches new objects for entries not found in cache while downloading. Otherwise serves from the cache.

|

||||

- Bitrot protection is added to cached content and verified when object is served from cache.

|

||||

- When an object is deleted, corresponding entry in cache if any is deleted as well.

|

||||

- Cache continues to work for read-only operations such as GET, HEAD when backend is offline.

|

||||

- Cache-Control and Expires headers can be used to control how long objects stay in the cache. The ETag of a cached object is not validated against the backend until the expiry time set by the Cache-Control or Expires header is reached.

|

||||

- All range GET requests are cached independently by default. This may not be desirable when cache storage is limited or where downloading an entire object at once would be more efficient. To turn this feature off and allow the entire object to be downloaded in the background, set `export MINIO_CACHE_RANGE=off`.

|

||||

- To ensure security guarantees, encrypted objects are normally not cached. However, if you wish to encrypt cached content on disk, you can set MINIO_CACHE_ENCRYPTION_SECRET_KEY environment variable to set a cache KMS

|

||||

master key to automatically encrypt all cached content.

|

||||

|

||||

Note that cache KMS master key is not recommended for use in production deployments. If the MinIO server/gateway machine is ever compromised, the cache KMS master key must also be treated as compromised.

|

||||

Support for external KMS to manage cache KMS keys is on the roadmap, and would be ideal for production use cases.

|

||||

|

||||

- `MINIO_CACHE_COMMIT` setting of `writethrough` allows caching of single and multipart uploads synchronously if enabled. By default, however single PUT operations are cached asynchronously on write without any special setting.

|

||||

|

||||

- Partially cached stale uploads older than 24 hours are automatically cleaned up.

|

||||

|

||||

- Expiration happens automatically based on the configured interval as explained above, frequently accessed objects stay alive in cache for a significantly longer time.

|

||||

|

||||

> NOTE: `MINIO_CACHE_COMMIT` also has a value of `writeback` which allows staging single uploads in cache before committing to remote. It is not possible to stage multipart uploads in the cache for consistency reasons - hence, multipart uploads will be cached synchronously even if `writeback` is set.

|

||||

|

||||

### Crash Recovery

|

||||

|

||||

Upon restart of minio gateway after a running minio process is killed or crashes, disk caching resumes automatically. The garbage collection cycle resumes and any previously cached entries are served from cache.

|

||||

|

||||

## Limits

|

||||

|

||||

- Bucket policies are not cached, so anonymous operations are not supported when backend is offline.

|

||||

- Objects are distributed using deterministic hashing among the list of configured cache drives. If one or more drives go offline, or cache drive configuration is altered in any way, performance may degrade to O(n) lookup time depending on the number of disks in cache.
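The drive-selection limit above can be pictured with a small sketch. This is not MinIO's implementation: the function name `pick_cache_drive`, the use of CRC32, and the linear probe over the drive list are all assumptions made for illustration. It only shows why a lookup can degrade to O(n) in the number of cache drives once the hashed drive is unavailable.

```python
import zlib


def pick_cache_drive(bucket, obj, drives, online):
    """Return the first online drive, probing from the hashed index.

    Hypothetical helper: hashing scheme and probing order are assumptions.
    """
    if not drives:
        raise ValueError("no cache drives configured")
    # The same bucket/object pair always hashes to the same starting index.
    start = zlib.crc32(f"{bucket}/{obj}".encode()) % len(drives)
    # If the preferred drive is offline, walk the remaining drives in order.
    # In the worst case this visits every drive: O(n) in the drive count.
    for step in range(len(drives)):
        candidate = drives[(start + step) % len(drives)]
        if candidate in online:
            return candidate
    return None


drives = ["/mnt/drive1", "/mnt/drive2", "/mnt/drive3"]
print(pick_cache_drive("mybucket", "photo.jpg", drives, set(drives)))
```

Changing the drive list changes `len(drives)` and therefore the starting index, which is one way to see why altering the cache drive configuration can send lookups to a different drive than the one that holds the cached entry.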

|

||||

@@ -1,45 +0,0 @@

|

||||

# Disk Cache Quickstart Guide [](https://slack.min.io)

|

||||

|

||||

Disk caching feature here refers to the use of caching disks to store content closer to the tenants. For instance, if you access an object from, say, a `gateway s3` setup and the downloaded object gets cached, each subsequent request for the object is served directly from the cache drives until it expires. This feature allows MinIO users to have

|

||||

|

||||

- Object to be delivered with the best possible performance.

|

||||

- Dramatic improvements for time to first byte for any object.

|

||||

|

||||

## Get started

|

||||

|

||||

### 1. Prerequisites

|

||||

|

||||

Install MinIO - [MinIO Quickstart Guide](https://min.io/docs/minio/linux/index.html#quickstart-for-linux).

|

||||

|

||||

### 2. Run MinIO gateway with cache

|

||||

|

||||

Disk caching can be enabled by setting the `cache` environment variables for MinIO gateway. The `cache` environment variables take the mounted drive(s) or directory paths, any wildcard patterns to exclude from being cached, low and high watermarks for garbage collection, and the minimum number of accesses before caching an object.

|

||||

|

||||

The following example uses `/mnt/drive1`, `/mnt/drive2`, `/mnt/cache1` ... `/mnt/cache3` for caching, while excluding all objects under bucket `mybucket` and all objects with '.pdf' as extension on an S3 gateway setup. Objects are cached if they have been accessed three times or more. Cache max usage is restricted to 80% of disk capacity in this example. Garbage collection is triggered when the high watermark is reached, i.e. at 72% of cache disk usage, and clears least recently accessed entries until the disk usage drops to the low watermark, i.e. 56% of cache disk usage (70% of the 80% quota).

|

||||

|

||||

```bash

|

||||

export MINIO_CACHE="on"

|

||||

export MINIO_CACHE_DRIVES="/mnt/drive1,/mnt/drive2,/mnt/cache{1...3}"

|

||||

export MINIO_CACHE_EXCLUDE="*.pdf,mybucket/*"

|

||||

export MINIO_CACHE_QUOTA=80

|

||||

export MINIO_CACHE_AFTER=3

|

||||

export MINIO_CACHE_WATERMARK_LOW=70

|

||||

export MINIO_CACHE_WATERMARK_HIGH=90

|

||||

|

||||

minio gateway s3

|

||||

```

|

||||

|

||||

The `CACHE_WATERMARK` numbers are percentages of `CACHE_QUOTA`.

|

||||

In the example above this means that `MINIO_CACHE_WATERMARK_LOW` is effectively `0.8 * 0.7 * 100 = 56%` and the `MINIO_CACHE_WATERMARK_HIGH` is effectively `0.8 * 0.9 * 100 = 72%` of total disk space.
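The arithmetic above can be checked with a few lines of Python. This is a minimal sketch: the function name `effective_watermarks` is hypothetical, and the range checks are an assumption about what counts as a sensible configuration rather than a description of what MinIO enforces.

```python
def effective_watermarks(quota: int, low: int, high: int) -> tuple[float, float]:
    """Return (low, high) watermarks as percentages of total disk space.

    All three inputs are percentages, mirroring MINIO_CACHE_QUOTA,
    MINIO_CACHE_WATERMARK_LOW and MINIO_CACHE_WATERMARK_HIGH.
    """
    for name, value in (("quota", quota), ("low", low), ("high", high)):
        if not 0 <= value <= 100:
            raise ValueError(f"{name} must be between 0 and 100, got {value}")
    if low >= high:
        # Assumption: eviction must stop below the point where it starts.
        raise ValueError("low watermark must be below high watermark")
    # Watermarks are percentages of the quota, which is itself a
    # percentage of the disk, so the two are multiplied.
    return quota * low / 100, quota * high / 100


low_pct, high_pct = effective_watermarks(quota=80, low=70, high=90)
print(f"eviction starts at {high_pct:g}% and stops at {low_pct:g}% of disk usage")
```

With the values from the example, eviction starts at 72% and stops at 56% of total disk usage.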

|

||||

|

||||

### 3. Test your setup

|

||||

|

||||

To test this setup, access the MinIO gateway via browser or [`mc`](https://min.io/docs/minio/linux/reference/minio-mc.html#quickstart). You’ll see the uploaded files are accessible from all the MinIO endpoints.

|

||||

|

||||

## Explore Further

|

||||

|

||||

- [Disk cache design](https://github.com/minio/minio/blob/master/docs/disk-caching/DESIGN.md)

|

||||

- [Use `mc` with MinIO Server](https://min.io/docs/minio/linux/reference/minio-mc.html#quickstart)

|

||||

- [Use `aws-cli` with MinIO Server](https://min.io/docs/minio/linux/integrations/aws-cli-with-minio.html)

|

||||

- [Use `minio-go` SDK with MinIO Server](https://min.io/docs/minio/linux/developers/go/minio-go.html)

|

||||

- [The MinIO documentation website](https://min.io/docs/minio/linux/index.html)

|

||||

@@ -1,15 +0,0 @@

|

||||

# MinIO Gateway [](https://slack.min.io)

|

||||

|

||||

**The MinIO Gateway has been deprecated as of February 2022 and removed from MinIO files as of July 2022.**

|

||||

**See https://blog.min.io/deprecation-of-the-minio-gateway/ for more information.**

|

||||

|

||||

## Support

|

||||

|

||||

Gateway implementations are frozen and are not accepting any new features. Please report any bugs at <https://github.com/minio/minio/issues>. If you are an existing customer please login to <https://subnet.min.io> for production support.

|

||||

|

||||

## Implementations

|

||||

|

||||

MinIO Gateway adds an Amazon S3 compatibility layer to third party NAS and Cloud Storage vendors. MinIO Gateway is implemented to facilitate migration of existing data from your legacy or cloud vendors to MinIO distributed server deployments.

|

||||

|

||||

- [NAS](https://github.com/minio/minio/blob/master/docs/gateway/nas.md)

|

||||

- [S3](https://github.com/minio/minio/blob/master/docs/gateway/s3.md)

|

||||

@@ -1,114 +0,0 @@

|

||||

# MinIO NAS Gateway [](https://slack.min.io)

|

||||

|

||||

> NAS gateway is deprecated and will be removed in future, no more fresh deployments are supported.

|

||||

|

||||

MinIO Gateway adds Amazon S3 compatibility to NAS storage. You may run multiple minio instances on the same shared NAS volume as a distributed object gateway.

|

||||

|

||||

## Support

|

||||

|

||||

Gateway implementations are frozen and are not accepting any new features. Please report any bugs at <https://github.com/minio/minio/issues>. If you are an existing customer please login to <https://subnet.min.io> for production support.

|

||||

|

||||

## Run MinIO Gateway for NAS Storage

|

||||

|

||||

### Using Docker

|

||||

|

||||

Please ensure to replace `/shared/nasvol` with actual mount path.

|

||||

|

||||

```

|

||||

podman run \

|

||||

-p 9000:9000 \

|

||||

-p 9001:9001 \

|

||||

--name nas-s3 \

|

||||

-e "MINIO_ROOT_USER=minio" \

|

||||

-e "MINIO_ROOT_PASSWORD=minio123" \

|

||||

-v /shared/nasvol:/container/vol \

|

||||

quay.io/minio/minio gateway nas /container/vol --console-address ":9001"

|

||||

```

|

||||

|

||||

### Using Binary

|

||||

|

||||

```

|

||||

export MINIO_ROOT_USER=minio

|

||||

export MINIO_ROOT_PASSWORD=minio123

|

||||

minio gateway nas /shared/nasvol

|

||||

```

|

||||

|

||||

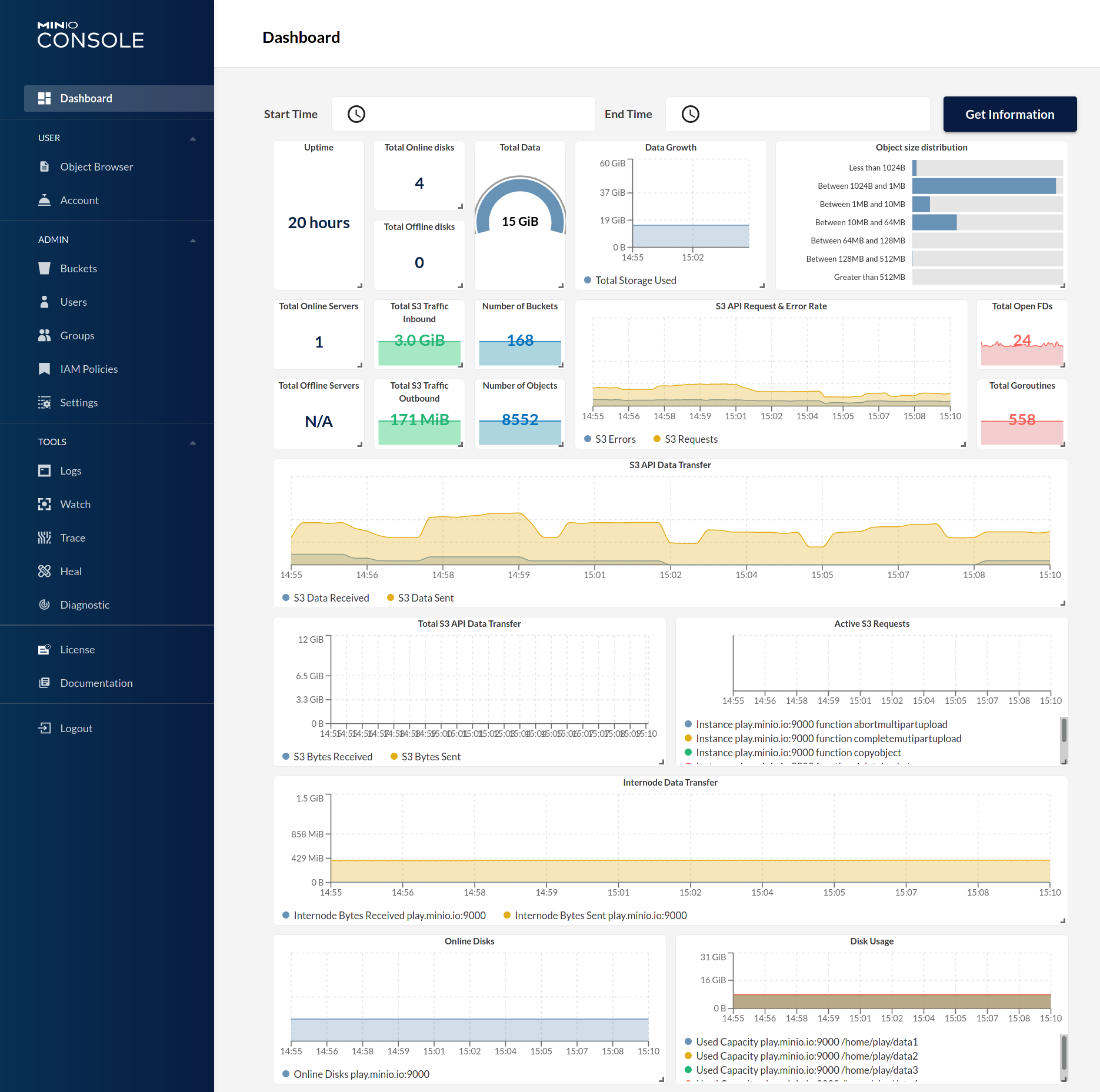

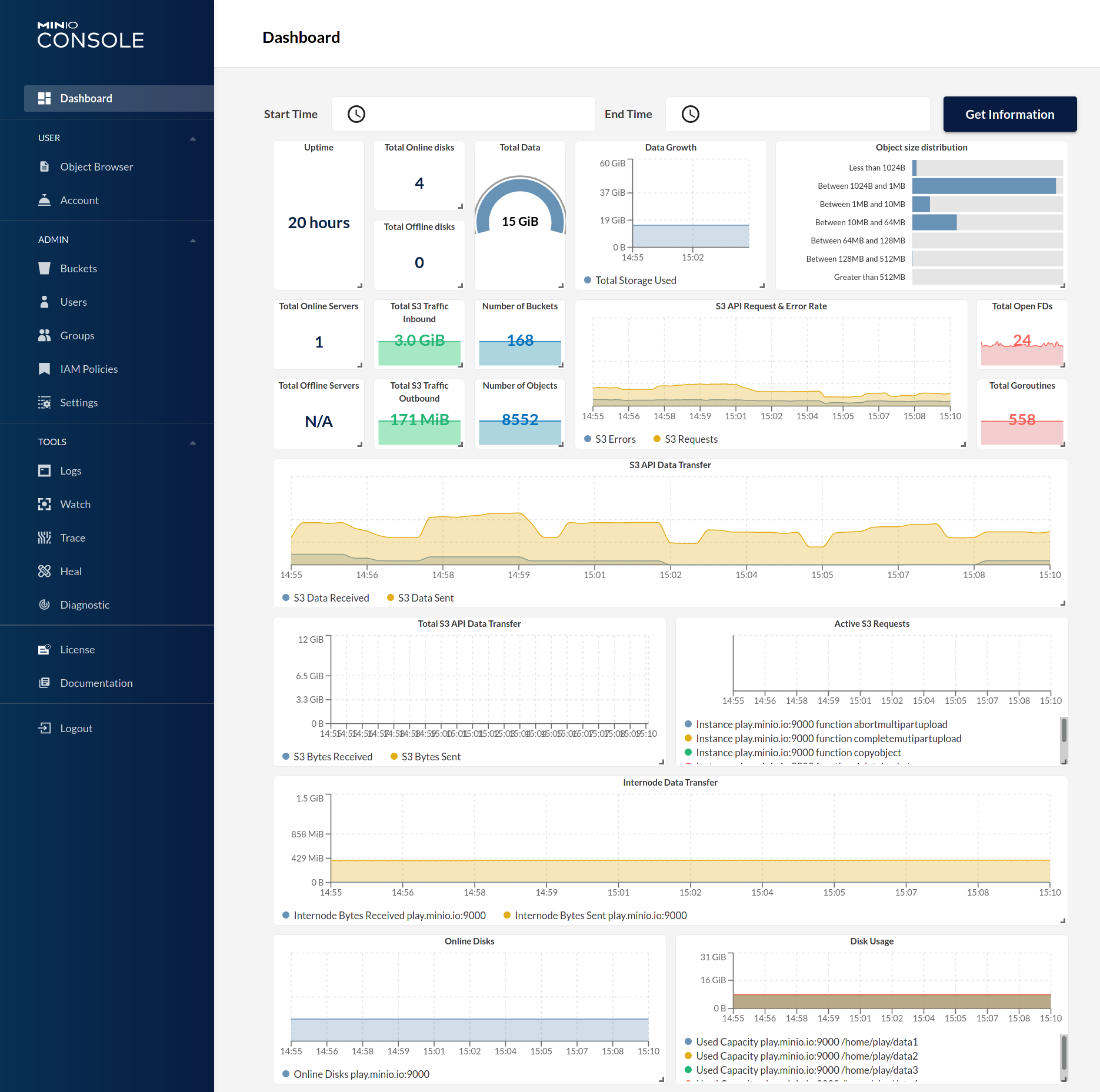

## Test using MinIO Console

|

||||

|

||||

MinIO Gateway comes with an embedded web based object browser. Point your web browser to <http://127.0.0.1:9000> to ensure that your server has started successfully.

|

||||

|

||||

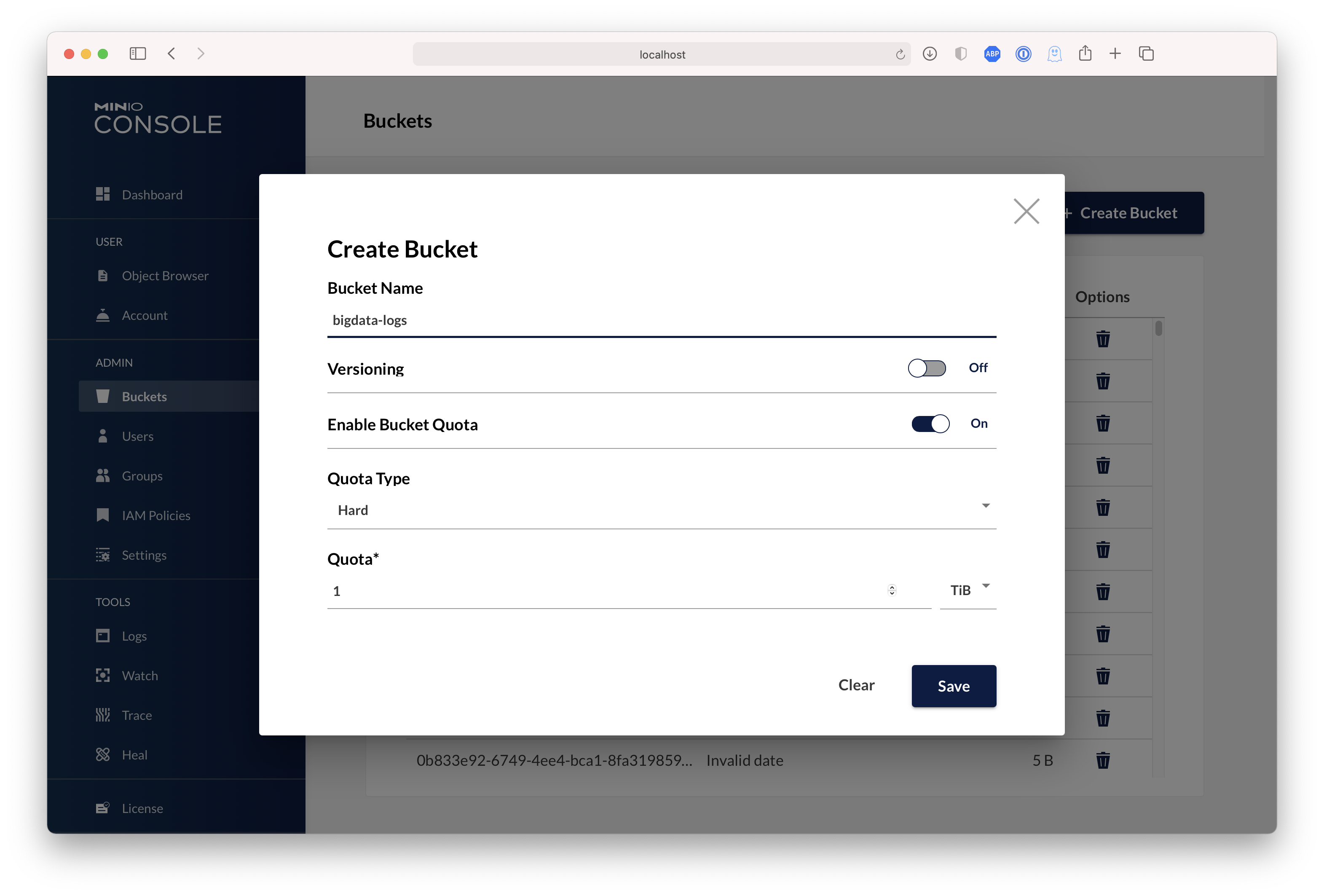

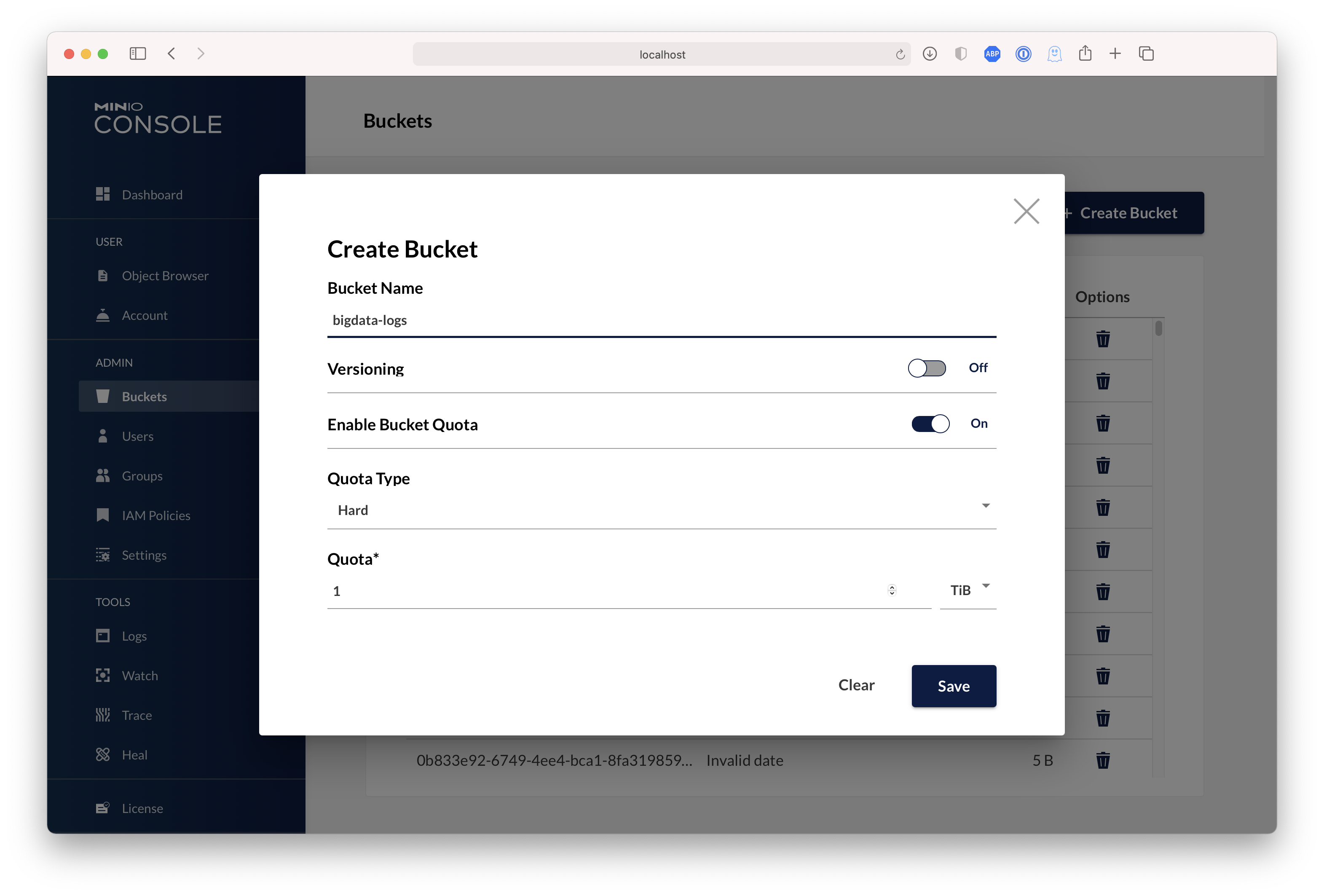

| Dashboard | Creating a bucket |

|

||||

| ------------- | ------------- |

|

||||

|  |  |

|

||||

|

||||

## Test using MinIO Client `mc`

|

||||

|

||||

`mc` provides a modern alternative to UNIX commands such as ls, cat, cp, mirror, diff, etc. It supports filesystems and Amazon S3 compatible cloud storage services.

|

||||

|

||||

### Configure `mc`

|

||||

|

||||

```

|

||||

mc alias set mynas http://gateway-ip:9000 access_key secret_key

|

||||

```

|

||||

|

||||

### List buckets on nas

|

||||

|

||||

```

|

||||

mc ls mynas

|

||||

[2017-02-22 01:50:43 PST] 0B ferenginar/

|

||||

[2017-02-26 21:43:51 PST] 0B my-bucket/

|

||||

[2017-02-26 22:10:11 PST] 0B test-bucket1/

|

||||

```

|

||||

|

||||

## Breaking changes

|

||||

|

||||

There will be a breaking change after the release version 'RELEASE.2020-06-22T03-12-50Z'.

|

||||

|

||||

### The file-based config settings are deprecated in NAS

|

||||

|

||||

The support for admin config APIs will be removed. This will include getters and setters like `mc admin config get` and `mc admin config set` and any other `mc admin config` options. The reason for this change is to avoid unnecessary reloads of the config from the disk, and to comply with the environment variable based settings used by other gateways.

|

||||

|

||||

### Migration guide

|

||||

|

||||

The users who have been using the older config approach should migrate to ENV settings by setting environment variables accordingly.

|

||||

|

||||

For example,

|

||||

|

||||

Consider the following webhook target config.

|

||||

|

||||

```

|

||||

notify_webhook:1 endpoint=http://localhost:8080/ auth_token= queue_limit=0 queue_dir=/tmp/webhk client_cert= client_key=

|

||||

```

|

||||

|

||||

The corresponding environment variable setting can be

|

||||

|

||||

```

|

||||

export MINIO_NOTIFY_WEBHOOK_ENABLE_1=on

|

||||

export MINIO_NOTIFY_WEBHOOK_ENDPOINT_1=http://localhost:8080/

|

||||

export MINIO_NOTIFY_WEBHOOK_QUEUE_DIR_1=/tmp/webhk

|

||||

```
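The mapping from a config line to environment variables can be sketched in Python. The naming pattern `MINIO_<SUBSYS>_<KEY>_<ID>` and the `ENABLE` flag are inferred from the webhook example above only, and `config_to_env` is a hypothetical helper, so check the generated names against the docs for your sub-system before relying on them. Values containing spaces or quotes are not handled.

```python
def config_to_env(line: str) -> dict[str, str]:
    """Translate one 'subsys:id key=value ...' line into env settings.

    Assumption: names follow MINIO_<SUBSYS>_<KEY>_<ID>, as in the
    webhook example; this is a sketch, not an official converter.
    """
    target, *pairs = line.split()
    subsys, _, ident = target.partition(":")
    prefix = f"MINIO_{subsys.upper()}"
    suffix = f"_{ident}" if ident else ""
    # The example enables the target explicitly, so do the same here.
    env = {f"{prefix}_ENABLE{suffix}": "on"}
    for pair in pairs:
        key, _, value = pair.partition("=")
        if value:  # settings with an empty value are left unset
            env[f"{prefix}_{key.upper()}{suffix}"] = value
    return env


line = ("notify_webhook:1 endpoint=http://localhost:8080/ auth_token= "
        "queue_limit=0 queue_dir=/tmp/webhk client_cert= client_key=")
for name, value in config_to_env(line).items():
    print(f"export {name}={value}")
```

Unlike the hand-written example, this sketch also emits the `queue_limit` setting because it has a non-empty value.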

|

||||

|

||||

> NOTE: Please check the docs for the corresponding ENV setting. Alternatively, we can obtain other ENVs in the form `mc admin config set alias/ <sub-sys> --env`

|

||||

|

||||

## Symlink support

|

||||

|

||||

NAS gateway implementation allows symlinks on regular files.

|

||||

|

||||

### Behavior

|

||||

|

||||

- For reads symlinks resolve to the file the symlink points to.

|

||||

- For deletes

|

||||

- Deleting a symlink deletes the symlink but not the real file to which the symlink points.

|

||||

- Deleting the real file a symlink points to automatically makes the dangling symlink invisible.

|

||||

|

||||

#### Caveats

|

||||

|

||||

- Disallows follow of directory symlinks to avoid security issues, and leaving them as is on namespace makes them very inconsistent.

|

||||

|

||||

*Directory symlinks are not and will not be supported as there are no safe ways to handle them.*

|

||||

|

||||

## Explore Further

|

||||

|

||||

- [`mc` command-line interface](https://min.io/docs/minio/linux/reference/minio-mc.html#quickstart)

|

||||

- [`aws` command-line interface](https://min.io/docs/minio/linux/integrations/aws-cli-with-minio.html)

|

||||

- [`minio-go` Go SDK](https://min.io/docs/minio/linux/developers/go/minio-go.html)

|

||||

@@ -1,160 +0,0 @@

|

||||

# MinIO S3 Gateway [](https://slack.min.io)

|

||||

|

||||

MinIO S3 Gateway adds MinIO features like MinIO Console and disk caching to AWS S3 or any other AWS S3 compatible service.

|

||||

|

||||

## Support

|

||||

|

||||

Gateway implementations are frozen and are not accepting any new features. Please report any bugs at <https://github.com/minio/minio/issues>. If you are an existing customer please login to <https://subnet.min.io> for production support.

|

||||

|

||||

## Run MinIO Gateway for AWS S3

|

||||

|

||||

As a prerequisite to run MinIO S3 gateway, you need a valid AWS S3 access key and secret key by default. Optionally, you can also set a custom access/secret key when you have rotating AWS IAM credentials or AWS credentials supplied through environment variables (i.e. AWS_ACCESS_KEY_ID).

|

||||

|

||||

### Using Docker

|

||||

|

||||

```

|

||||

podman run \

|

||||

-p 9000:9000 \

|

||||

-p 9001:9001 \

|

||||

--name minio-s3 \

|

||||

-e "MINIO_ROOT_USER=aws_s3_access_key" \

|

||||

-e "MINIO_ROOT_PASSWORD=aws_s3_secret_key" \

|

||||

quay.io/minio/minio gateway s3 --console-address ":9001"

|

||||

```

|

||||

|

||||

### Using Binary

|

||||

|

||||

```

|

||||

export MINIO_ROOT_USER=aws_s3_access_key

|

||||

export MINIO_ROOT_PASSWORD=aws_s3_secret_key

|

||||

minio gateway s3

|

||||

```

|

||||

|

||||

### Using Binary in EC2

|

||||

|

||||

Using IAM rotating credentials for AWS S3

|

||||

|

||||

If you are using an S3 enabled IAM role on an EC2 instance for S3 access, MinIO will still require env vars MINIO_ROOT_USER and MINIO_ROOT_PASSWORD to be set for its internal use. These may be set to any value which meets the length requirements. Access key length should be at least 3, and secret key length at least 8 characters.
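The length requirements can be checked before starting the gateway. The sketch below is illustrative only: `check_root_credentials` is a hypothetical helper, and it encodes just the two minimum lengths stated above, not any other rule the server may apply.

```python
def check_root_credentials(user: str, password: str) -> list[str]:
    """Return a list of problems; an empty list means both values pass."""
    problems = []
    if len(user) < 3:
        problems.append("MINIO_ROOT_USER must be at least 3 characters long")
    if len(password) < 8:
        problems.append("MINIO_ROOT_PASSWORD must be at least 8 characters long")
    return problems


# Placeholder values taken from the example in this section.
print(check_root_credentials("custom_access_key", "custom_secret_key"))
```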

|

||||

|

||||

```

|

||||

export MINIO_ROOT_USER=custom_access_key

|

||||

export MINIO_ROOT_PASSWORD=custom_secret_key

|

||||

minio gateway s3

|

||||

```

|

||||

|

||||

MinIO gateway will automatically look for credentials in the following order if your backend URL is AWS S3.

|

||||

|

||||

- AWS env vars (i.e. AWS_ACCESS_KEY_ID)

|

||||

- AWS creds file (i.e. AWS_SHARED_CREDENTIALS_FILE or ~/.aws/credentials)

|

||||

- IAM profile based credentials. (performs an HTTP call to a pre-defined endpoint, only valid inside configured ec2 instances)
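The lookup order above can be sketched as a simple fallback chain. This is not the gateway's code: `credential_source` is a hypothetical helper, it only reports which source would be tried first, and the IAM step is reduced to a label because the real step performs an HTTP call that is only valid inside an EC2 instance.

```python
import os


def credential_source(env, file_exists=os.path.isfile):
    """Name the first credential source that looks usable.

    `env` is any mapping of environment variables; `file_exists` is
    injectable so the sketch can be exercised without touching disk.
    """
    # 1. AWS environment variables.
    if env.get("AWS_ACCESS_KEY_ID") and env.get("AWS_SECRET_ACCESS_KEY"):
        return "env"
    # 2. Shared credentials file, at the overridden or default location.
    creds_file = env.get("AWS_SHARED_CREDENTIALS_FILE") or os.path.expanduser(
        "~/.aws/credentials"
    )
    if file_exists(creds_file):
        return "file"
    # 3. Fall back to IAM profile based credentials.
    return "iam"


print(credential_source(os.environ))
```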

|

||||

|

||||

If you wish to provide restricted access with your AWS credentials, please make sure the following minimum IAM policies are attached to your AWS user or roles.

|

||||

|

||||

```json

|

||||

{

|

||||

"Version": "2012-10-17",

|

||||

"Statement": [

|

||||

{

|

||||

"Effect": "Allow",

|

||||

"Action": [

|

||||

"s3:GetBucketLocation"

|

||||

],

|

||||

"Resource": [

|

||||

"arn:aws:s3:::*"

|

||||

]

|

||||

},

|

||||

{

|

||||

"Effect": "Allow",

|

||||

"Action": [

|

||||

"s3:PutObject",

|

||||

"s3:GetObject",

|

||||

"s3:ListBucket",

|

||||

"s3:DeleteObject",

|

||||

"s3:GetBucketAcl"

|

||||

],

|

||||

"Resource": [

|

||||

"arn:aws:s3:::mybucket",

|

||||

"arn:aws:s3:::mybucket/*"

|

||||

]

|

||||

}

|

||||

]

|

||||

}

|

||||

```

|

||||

|

||||

## Run MinIO Gateway for AWS S3 compatible services

|

||||

|

||||

As a prerequisite to run MinIO S3 gateway on an AWS S3 compatible service, you need valid access key, secret key and service endpoint.

|

||||

|

||||

## Run MinIO Gateway with double-encryption

|

||||

|

||||

MinIO gateway to S3 supports encryption of data at rest. Three types of encryption modes are supported

|

||||

|

||||

- encryption can be set to ``pass-through`` to the backend for SSE-S3 only; SSE-C is not allowed to pass through.

|

||||

- ``single encryption`` (at the gateway)

|

||||

- ``double encryption`` (single encryption at gateway and pass through to backend)

|

||||

|

||||

This can be specified by setting the MINIO_GATEWAY_SSE environment variable. If MINIO_GATEWAY_SSE and KMS are not set up, all encryption headers are passed through to the backend. If KMS environment variables are set up, ``single encryption`` is automatically performed at the gateway and the encrypted object is saved at the backend.

|

||||

|

||||

To specify ``double encryption``, MINIO_GATEWAY_SSE environment variable needs to be set to "s3" for sse-s3

|

||||

and "c" for sse-c encryption. More than one encryption option can be set, delimited by ";". Objects are encrypted at the gateway and the gateway also does a pass-through to backend. Note that in the case of SSE-C encryption, gateway derives a unique SSE-C key for pass through from the SSE-C client key using a key derivation function (KDF).
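The three modes described above can be summarised as a small decision function. This is a sketch of the prose, not of the gateway's code: `gateway_encryption_mode` is a hypothetical name, values are compared case-insensitively as an assumption, and the behavior when MINIO_GATEWAY_SSE is set without a KMS is not stated in this document, so the sketch treats that case as pass-through and says so in a comment.

```python
def gateway_encryption_mode(gateway_sse: str, kms_configured: bool) -> str:
    """Map MINIO_GATEWAY_SSE and KMS availability to an encryption mode."""
    # Options are delimited by ";", e.g. "s3;c".
    backend = {p.strip().lower() for p in gateway_sse.split(";") if p.strip()}
    unknown = backend - {"s3", "c"}
    if unknown:
        raise ValueError(f"unsupported MINIO_GATEWAY_SSE option(s): {sorted(unknown)}")
    if not kms_configured:
        # Assumption: without a KMS the gateway cannot encrypt, so
        # encryption headers are simply passed to the backend.
        return "pass-through"
    # With a KMS, the gateway always encrypts; listing "s3" and/or "c"
    # additionally passes encryption through to the backend.
    return "double encryption" if backend else "single encryption"


print(gateway_encryption_mode("s3;c", kms_configured=True))
```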

|

||||

|

||||

```sh

|

||||

curl -sSL --tlsv1.2 \

|

||||

-O 'https://raw.githubusercontent.com/minio/kes/master/root.key' \

|

||||

-O 'https://raw.githubusercontent.com/minio/kes/master/root.cert'

|

||||

```

|

||||

|

||||

```sh

|

||||

export MINIO_GATEWAY_SSE="s3;c"

|

||||

export MINIO_KMS_KES_ENDPOINT=https://play.min.io:7373

|

||||

export MINIO_KMS_KES_KEY_FILE=root.key

|

||||

export MINIO_KMS_KES_CERT_FILE=root.cert

|

||||

export MINIO_KMS_KES_KEY_NAME=my-minio-key

|

||||

minio gateway s3

|

||||

```

|

||||

|

||||

### Using Docker (double encryption)

|

||||

|

||||

```

|

||||

podman run -p 9000:9000 --name minio-s3 \

|

||||

-e "MINIO_ROOT_USER=access_key" \

|

||||

-e "MINIO_ROOT_PASSWORD=secret_key" \

|

||||

quay.io/minio/minio gateway s3 https://s3_compatible_service_endpoint:port

|

||||

```

|

||||

|

||||

### Using Binary (double encryption)

|

||||

|

||||

```

|

||||

export MINIO_ROOT_USER=access_key

|

||||

export MINIO_ROOT_PASSWORD=secret_key

|

||||

minio gateway s3 https://s3_compatible_service_endpoint:port

|

||||

```

|

||||

|

||||

## MinIO Caching

|

||||

|

||||

MinIO edge caching allows storing content closer to the applications. Frequently accessed objects are stored in a local disk based cache. Edge caching with MinIO gateway feature allows

|

||||

|

||||

- Dramatic improvements for time to first byte for any object.

|

||||

- Avoid S3 [data transfer charges](https://aws.amazon.com/s3/pricing/).

|

||||

|

||||

## MinIO Console

|

||||

|

||||

MinIO Gateway comes with an embedded web based object browser. Point your web browser to <http://127.0.0.1:9000> to ensure that your server has started successfully.

|

||||

|

||||

| Dashboard | Creating a bucket |

|

||||

| ------------- | ------------- |

|

||||

|  |  |

|

||||

|

||||

With MinIO S3 gateway, you can use MinIO Console to explore AWS S3 based objects.

|

||||

|

||||

### Known limitations

|

||||

|

||||

- Bucket Notification APIs are not supported.

|

||||

- Bucket Locking APIs are not supported.

|

||||

- Versioned buckets on AWS S3 are not supported from gateway layer. Gateway is meant to be used with non-versioned buckets.

|

||||

|

||||

## Explore Further

|

||||

|

||||

- [`mc` command-line interface](https://min.io/docs/minio/linux/reference/minio-mc.html)

|

||||

- [`aws` command-line interface](https://min.io/docs/minio/linux/integrations/aws-cli-with-minio.html)

|

||||

- [`minio-go` Go SDK](https://min.io/docs/minio/linux/developers/go/minio-go.html)

|

||||

@@ -4,7 +4,7 @@ MinIO server exposes three un-authenticated, healthcheck endpoints liveness prob

|

||||

|

||||

## Liveness probe

|

||||

|

||||

This probe always responds with '200 OK'. Only fails if 'etcd' is configured and unreachable. This behavior is specific to gateway. When liveness probe fails, Kubernetes like platforms restart the container.

|

||||

This probe always responds with '200 OK'. Only fails if 'etcd' is configured and unreachable. When liveness probe fails, Kubernetes like platforms restart the container.

|

||||

|

||||

```

|

||||

livenessProbe:

|

||||

@@ -21,7 +21,7 @@ livenessProbe:

|

||||

|

||||

## Readiness probe

|

||||

|

||||

This probe always responds with '200 OK'. Only fails if 'etcd' is configured and unreachable. This behavior is specific to gateway. When readiness probe fails, Kubernetes like platforms turn-off routing to the container.

|

||||

This probe always responds with '200 OK'. Only fails if 'etcd' is configured and unreachable. When readiness probe fails, Kubernetes like platforms turn-off routing to the container.

|

||||

|

||||

```

|

||||

readinessProbe:

|

||||

|

||||

@@ -10,7 +10,7 @@ In this document we will explain in detail on how to configure multiple users.

|

||||

|

||||

- Install mc - [MinIO Client Quickstart Guide](https://min.io/docs/minio/linux/reference/minio-mc.html#quickstart)

|

||||

- Install MinIO - [MinIO Quickstart Guide](https://min.io/docs/minio/linux/index.html#quickstart-for-linux)

|

||||

- Configure etcd (optional needed only in gateway or federation mode) - [Etcd V3 Quickstart Guide](https://github.com/minio/minio/blob/master/docs/sts/etcd.md)

|

||||

- Configure etcd - [Etcd V3 Quickstart Guide](https://github.com/minio/minio/blob/master/docs/sts/etcd.md)

|

||||

|

||||

### 2. Create a new user with canned policy

|

||||

|

||||

|

||||

@@ -1,138 +0,0 @@

|

||||

# Introduction

|

||||

|

||||

This feature allows MinIO to serve a shared NAS drive across multiple MinIO instances. There are no special configuration changes required to enable this feature. Access to files stored on the NAS volume is locked and synchronized by default.

|

||||

|

||||

## Motivation

|

||||

|

||||

Since each MinIO instance serves a single tenant, users increasingly want to run multiple MinIO instances on the same backend, managed by an existing NAS (NFS, GlusterFS, other distributed filesystems) rather than a local disk. This feature is also implemented with minimal disruption in mind for the user and the overall UI.

|

||||

|

||||

## Restrictions

|

||||

|

||||

* A PutObject() is blocked and waits if another GetObject() is in progress.

|

||||

* A CompleteMultipartUpload() is blocked and waits if another PutObject() or GetObject() is in progress.

|

||||

* Cannot run FS mode as a remote disk RPC.

|

||||

|

||||

## How To Run?

|

||||

|

||||

Running MinIO instances on a shared backend is no different from running on a stand-alone disk. There are no special configuration changes required to enable this feature. Access to files stored on the NAS volume is locked and synchronized by default. The following examples clarify this further for each operating system:

|

||||

|

||||

### Ubuntu 16.04 LTS

|

||||

|

||||

Example 1: Start MinIO instance on a shared backend mounted and available at `/path/to/nfs-volume`.

|

||||

|

||||

On linux server1

|

||||

|

||||

```shell

|

||||

minio gateway nas /path/to/nfs-volume

|

||||

```

|

||||

|

||||

On linux server2

|

||||

|

||||

```shell

|

||||

minio gateway nas /path/to/nfs-volume

|

||||

```

|

||||

|

||||

### Windows 2012 Server

|

||||

|

||||

Example 1: Start MinIO instance on a shared backend mounted and available at `\\remote-server\cifs`.

|

||||

|

||||

On windows server1

|

||||

|

||||

```cmd

|

||||

minio.exe gateway nas \\remote-server\cifs\data

|

||||

```

|

||||

|

||||

On windows server2

|

||||

|

||||

```cmd

|

||||

minio.exe gateway nas \\remote-server\cifs\data

|

||||

```

|

||||

|

||||

Alternatively if `\\remote-server\cifs` is mounted as `D:\` drive.

|

||||

|

||||

On windows server1

|

||||

|

||||

```cmd

|

||||

minio.exe gateway nas D:\data

|

||||

```

|

||||

|

||||

On windows server2

|

||||

|

||||

```cmd

|

||||

minio.exe gateway nas D:\data

|

||||

```

|

||||

|

||||

## Architecture

|

||||

|

||||

### POSIX/Win32 Locks

|

||||

|

||||

#### Lock process

|

||||

|

||||

Within the same MinIO instance, locking is handled by the existing in-memory namespace locks (**sync.RWMutex** et al.). To synchronize locks between many MinIO instances we leverage POSIX `fcntl()` locks on Unixes and the `LockFileEx()` Win32 API on Windows. A write lock request blocks if a neighboring MinIO instance holds any read locks on the same path. Likewise, a read lock request blocks while a write lock is active.

|

||||

|

||||

#### Unlock process

|

||||

|

||||

Unlocking a filesystem lock happens by closing the file descriptor (fd) that was initially opened for the lock operation. Closing the fd tells the kernel to relinquish all the locks held on the path by the current process. This gets trickier when the same process has many readers on the same path, because closing an fd relinquishes the locks for all concurrent readers as well. To manage this situation properly, a simple fd reference count is implemented and the same fd is shared between many readers. As readers close the fd the reference count is decremented; once it reaches zero we can be sure that no readers are active. We then close the underlying file descriptor, which relinquishes the read lock held on the path.

|

||||

|

||||

This doesn't apply for the writes because there is always one writer and many readers for any unique object.
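The reference counting described above can be sketched roughly as follows. This is an illustration only, not MinIO's actual `rwPool` code: the names `fakeFD`, `refHandle`, and `readPool` are hypothetical, and `fakeFD` stands in for a real file descriptor holding an `fcntl()` or `LockFileEx()` lock.

```go
package main

import (
	"fmt"
	"sync"
)

// fakeFD stands in for a locked file descriptor (hypothetical; a real
// implementation would wrap an *os.File that holds the filesystem lock).
type fakeFD struct{ closed bool }

// refHandle pairs one shared descriptor with its number of active readers.
type refHandle struct {
	fd   *fakeFD
	refs int
}

// readPool shares a single descriptor per path among concurrent readers.
type readPool struct {
	mu      sync.Mutex
	handles map[string]*refHandle
}

func newReadPool() *readPool {
	return &readPool{handles: make(map[string]*refHandle)}
}

// Open returns the shared descriptor for path and bumps its reference count.
func (p *readPool) Open(path string) *fakeFD {
	p.mu.Lock()
	defer p.mu.Unlock()
	h, ok := p.handles[path]
	if !ok {
		h = &refHandle{fd: &fakeFD{}}
		p.handles[path] = h
	}
	h.refs++
	return h.fd
}

// Close drops one reference. It reports true only when the last reader has
// left and the underlying descriptor (and thus the lock) was released.
func (p *readPool) Close(path string) bool {
	p.mu.Lock()
	defer p.mu.Unlock()
	h, ok := p.handles[path]
	if !ok {
		return false
	}
	h.refs--
	if h.refs > 0 {
		return false
	}
	h.fd.closed = true
	delete(p.handles, path)
	return true
}

func main() {
	p := newReadPool()
	p.Open("bucket/object/fs.json")
	p.Open("bucket/object/fs.json")
	fmt.Println(p.Close("bucket/object/fs.json")) // first reader leaves
	fmt.Println(p.Close("bucket/object/fs.json")) // last reader leaves
}
```

The point of the sketch is that an early `Close` by one reader must not release the lock that other readers in the same process still rely on.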

|

||||

|

||||

### Handling Concurrency

|

||||

|

||||

An example here shows how the contention is handled with GetObject().

|

||||

|

||||

GetObject() holds a read lock on `fs.json`.

|

||||

|

||||

```go

|

||||

fsMetaPath := pathJoin(fs.fsPath, minioMetaBucket, bucketMetaPrefix, bucket, object, fsMetaJSONFile)

|

||||

rlk, err := fs.rwPool.Open(fsMetaPath)

|

||||

if err != nil {

|

||||

return toObjectErr(err, bucket, object)

|

||||

}

|

||||

defer rlk.Close()

|

||||

|

||||

// ... you can perform other operations here ...

|

||||

|

||||

_, err = io.Copy(writer, reader)

|

||||

|

||||

// ... after a successful copy, the deferred Close() releases the read lock ...

|

||||

```

|

||||

|

||||

When a concurrent PutObject() is requested on the same object, it attempts a write lock on `fs.json`.

|

||||

|

||||

```go

|

||||

fsMetaPath := pathJoin(fs.fsPath, minioMetaBucket, bucketMetaPrefix, bucket, object, fsMetaJSONFile)

|

||||

wlk, err := fs.rwPool.Create(fsMetaPath)

|

||||

if err != nil {

|

||||

return ObjectInfo{}, toObjectErr(err, bucket, object)

|

||||

}

|

||||

// This close will allow for locks to be synchronized on `fs.json`.

|

||||

defer wlk.Close()

|

||||

```

|

||||

|

||||

In the snippet above, the following portion of the code blocks until GetObject() has finished writing to the client.

|

||||

|

||||

```go

|

||||

wlk, err := fs.rwPool.Create(fsMetaPath)

|

||||

```

|

||||

|

||||

This restriction is needed so that corrupted data is not returned to the client in between I/O. The logic works the other way around as well: while a PutObject() is in progress, GetObject() waits for the PutObject() to complete.
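The ordering can be illustrated within a single process using `sync.RWMutex`, which the design names as the in-memory namespace lock. This is a minimal sketch under that assumption; the function `lockOrder` and the event strings are hypothetical, and it does not exercise the cross-instance `fcntl()` path.

```go
package main

import (
	"fmt"
	"sync"
)

// lockOrder simulates a GetObject holding a read lock while a concurrent
// PutObject requests the write lock, and returns the observed event order.
func lockOrder() []string {
	var (
		objLock sync.RWMutex // stands in for the lock on fs.json
		evMu    sync.Mutex
		events  []string
	)
	record := func(s string) {
		evMu.Lock()
		events = append(events, s)
		evMu.Unlock()
	}

	objLock.RLock()
	record("get: read lock held")

	done := make(chan struct{})
	go func() {
		objLock.Lock() // blocks until the reader calls RUnlock
		record("put: write lock acquired")
		objLock.Unlock()
		close(done)
	}()

	record("get: copy finished")
	objLock.RUnlock()

	<-done
	return events
}

func main() {
	for _, e := range lockOrder() {
		fmt.Println(e)
	}
}
```

Because the writer cannot pass `Lock()` before the reader calls `RUnlock()`, the write-lock event is always recorded last.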

|

||||

|

||||

### Caveats (concurrency)

|

||||

|

||||

Consider for example 3 servers sharing the same backend

|

||||

|

||||

On minio1

|

||||

|

||||

* DeleteObject(object1) --> lock acquired on `fs.json` while object1 is being deleted.

|

||||

|

||||

On minio2

|

||||

|

||||

* PutObject(object1) --> lock waiting until DeleteObject finishes.

|

||||

|

||||

On minio3

|

||||

|

||||

* PutObject(object1) --> (concurrent request during PutObject minio2 checking if `fs.json` exists)

|

||||

|

||||

Once the lock is acquired, minio2 validates whether the file really exists, to avoid holding a lock on an fd which is already deleted. But this opens a race with a third server that is also attempting to write the same file before minio2 can validate that the file exists. It is possible that `fs.json` has been created in the meantime, so the lock acquired by minio2 might be invalid and can lead to a potential inconsistency.

|

||||

|

||||

This is a known problem and cannot be solved by POSIX `fcntl()` locks. It is considered a limitation of shared filesystems.

|

||||

@@ -1,70 +0,0 @@

|

||||

# Shared Backend MinIO Quickstart Guide

|

||||

|

||||

MinIO shared mode lets you use a single [NAS](https://en.wikipedia.org/wiki/Network-attached_storage) (like NFS, GlusterFS, and other

|

||||

distributed filesystems) as the storage backend for multiple MinIO servers. Synchronization among MinIO servers is taken care of by design.

|

||||

Read more about the MinIO shared mode design [here](https://github.com/minio/minio/blob/master/docs/shared-backend/DESIGN.md).

|

||||

|

||||

MinIO shared mode is developed to solve several real world use cases, without any special configuration changes. Some of these are

|

||||

|

||||

- You have already invested in NAS and would like to use MinIO to add S3 compatibility to your storage tier.

|

||||

- You need to use NAS with an S3 interface due to your application architecture requirements.

|

||||

- You expect huge traffic and need a load balanced S3 compatible server, serving files from a single NAS backend.

|

||||

|

||||

With a proxy running in front of multiple, shared mode MinIO servers, it is very easy to create a Highly Available, load balanced, AWS S3 compatible storage system.

|

||||

|

||||

## Get started

|

||||

|

||||

If you're familiar with the stand-alone MinIO setup, installing and running shared mode works the same way.

|

||||

|

||||

## 1. Prerequisites

|

||||

|

||||

Install MinIO - [MinIO Quickstart Guide](https://min.io/docs/minio/linux/index.html#quickstart-for-linux).

|

||||

|

||||

## 2. Run MinIO on Shared Backend

|

||||

|

||||

To run MinIO shared backend instances, you need to start multiple MinIO servers pointing to the same backend storage. We'll see examples on how to do this in the following sections.

|

||||

|

||||

- All the nodes running shared MinIO need to have the same access key and secret key. To achieve this, export the access key and secret key as environment variables on all the nodes before executing the MinIO server command.

|

||||

- The drive paths below are for demonstration purposes only, you need to replace these with the actual drive paths/folders.

|

||||

|

||||

### MinIO shared mode on Ubuntu 16.04 LTS

|

||||

|

||||

You'll need the path to the shared volume, e.g. `/path/to/nfs-volume`. Then run the following commands on all the nodes you'd like to launch MinIO.

|

||||

|

||||

```sh

|

||||

export MINIO_ROOT_USER=<ACCESS_KEY>

|

||||

export MINIO_ROOT_PASSWORD=<SECRET_KEY>

|

||||

minio gateway nas /path/to/nfs-volume

|

||||

```

|

||||

|

||||

### MinIO shared mode on Windows 2012 Server

|

||||

|

||||

You'll need the path to the shared volume, e.g. `\\remote-server\smb`. Then run the following commands on all the nodes you'd like to launch MinIO.

|

||||

|

||||

```cmd

|

||||

set MINIO_ROOT_USER=my-username

|

||||

set MINIO_ROOT_PASSWORD=my-password

|

||||

minio.exe gateway nas \\remote-server\smb\export

|

||||

```

|

||||

|

||||

### Windows Tip

|

||||

|

||||

If a remote volume, e.g. `\\remote-server\smb`, should be mounted as a drive, e.g. `M:\`, you can use the [`net use`](https://technet.microsoft.com/en-us/library/bb490717.aspx) command to map the drive to a folder.

|

||||

|

||||

```cmd

|

||||

set MINIO_ROOT_USER=my-username

|

||||

set MINIO_ROOT_PASSWORD=my-password

|

||||

net use m: \\remote-server\smb\export /P:Yes

|

||||

minio.exe gateway nas M:\export

|

||||

```

|

||||

|

||||

## 3. Test your setup

|

||||

|

||||

To test this setup, access the MinIO server via browser or [`mc`](https://min.io/docs/minio/linux/reference/minio-mc.html#quickstart). You’ll see the uploaded files are accessible from all the MinIO shared backend endpoints.

|

||||

|

||||

## Explore Further

|

||||

|

||||

- [Use `mc` with MinIO Server](https://min.io/docs/minio/linux/reference/minio-mc.html)

|

||||

- [Use `aws-cli` with MinIO Server](https://min.io/docs/minio/linux/integrations/aws-cli-with-minio.html)

|

||||

- [Use `minio-go` SDK with MinIO Server](https://min.io/docs/minio/linux/developers/go/minio-go.html)

|

||||

- [The MinIO documentation website](https://min.io/docs/minio/linux/index.html)

|

||||

@@ -42,7 +42,7 @@ In this document we will explain in detail on how to configure all the prerequis

|

||||

### Prerequisites

|

||||

|

||||

- [Configuring keycloak](https://github.com/minio/minio/blob/master/docs/sts/keycloak.md) or [Configuring Casdoor](https://github.com/minio/minio/blob/master/docs/sts/casdoor.md)

|

||||

- [Configuring etcd (optional needed only in gateway or federation mode)](https://github.com/minio/minio/blob/master/docs/sts/etcd.md)

|

||||

- [Configuring etcd](https://github.com/minio/minio/blob/master/docs/sts/etcd.md)

|

||||

|

||||

### Setup MinIO with Identity Provider

|

||||

|

||||

@@ -68,21 +68,6 @@ export MINIO_IDENTITY_OPENID_CLIENT_ID="843351d4-1080-11ea-aa20-271ecba3924a"

|

||||

minio server /mnt/data

|

||||

```

|

||||

|

||||

### Setup MinIO Gateway with Keycloak and Etcd

|

||||

|

||||

Make sure you have followed the previous step and configured each piece of software independently. Once done, we can proceed to use the MinIO STS API and MinIO gateway with these credentials to perform object API operations.

|

||||

|

||||

> NOTE: MinIO gateway requires etcd to be configured to use STS API.

|

||||

|

||||

```

|

||||

export MINIO_ROOT_USER=aws_access_key

|

||||

export MINIO_ROOT_PASSWORD=aws_secret_key

|

||||

export MINIO_IDENTITY_OPENID_CONFIG_URL=http://localhost:8080/auth/realms/demo/.well-known/openid-configuration

|

||||

export MINIO_IDENTITY_OPENID_CLIENT_ID="843351d4-1080-11ea-aa20-271ecba3924a"

|

||||

export MINIO_ETCD_ENDPOINTS=http://localhost:2379

|

||||

minio gateway s3

|

||||

```

|

||||

|

||||

### Using WebIdentity API

|

||||

|

||||

On another terminal run `web-identity.go`, a sample client application which obtains JWT id_tokens from an identity provider, in our case Keycloak. It uses the returned id_token response to get new temporary credentials from the MinIO server using the STS API call `AssumeRoleWithWebIdentity`.

|

||||

|

||||
